#SQL Server archiving

Text

The Importance of Archiving SQL Server Data: Balancing Performance and Retention Needs

Introduction

As a SQL Server DBA, one of the biggest challenges I’ve faced is dealing with rapidly growing history tables. I’ve seen them balloon from a few gigabytes to terabytes in size, causing all sorts of performance headaches. But at the same time, the application teams are often hesitant to archive old data due to potential requests from other teams who may need it. In this article, I’ll…

0 notes

Text

With the AO3 downtime/ddos attack getting people antsy, longing for the days where we still had our fandom fic sites we could browse (or at least missing works being available in multiple locations instead of concentrated on one amazing site that unfortunately attracts bad actors and risks disappearing forever), consider setting up an efiction server!

All you need is a self-hosted website and minimal understanding of SQL (if you've successfully managed a self-hosted wordpress instance, you're probably equipped to install and manage this!).

It's been built just for such occasions, to allow people to create their own AO3s or ff.nets, and cross-posting is something we should think about getting back to in these uncertain times!

24 notes

Text

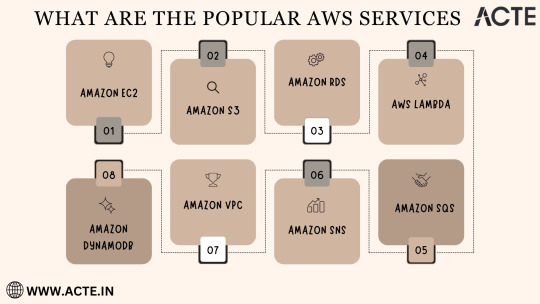

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.
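To make the pay-for-what-you-use idea concrete, here is a rough sketch of how duration-based billing scales with usage. It assumes compute is metered in GB-seconds (memory multiplied by run time); the function name, the workload figures, and the unit price are placeholders chosen for illustration, not quoted AWS rates, and per-request charges are left out.

```python
def lambda_compute_cost(invocations, avg_duration_ms, memory_mb, price_per_gb_second):
    """Estimate compute cost as GB-seconds consumed times a unit price."""
    seconds_per_call = avg_duration_ms / 1000
    memory_gb = memory_mb / 1024
    gb_seconds = invocations * seconds_per_call * memory_gb
    return gb_seconds * price_per_gb_second


# Hypothetical workload and a placeholder unit price (assumption, not an AWS quote).
busy_month = lambda_compute_cost(
    invocations=1_000_000, avg_duration_ms=200, memory_mb=512,
    price_per_gb_second=0.00002,
)

# With no invocations there is no compute time, so the compute cost is zero.
idle_month = lambda_compute_cost(
    invocations=0, avg_duration_ms=200, memory_mb=512,
    price_per_gb_second=0.00002,
)
```

The point of the sketch is the shape of the formula: cost follows invocations and run time, so an idle function costs nothing for compute.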

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile devices. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.
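The decoupling idea behind a message queue can be sketched locally without any AWS account. The example below uses Python's standard `queue.Queue` as a stand-in for a managed queue; it is not the SQS API, and the `producer`, `consumer`, and `run_pipeline` names are invented for illustration. The producer only knows how to put messages on the queue, and the consumer only knows how to take them off.

```python
import queue
import threading


def producer(q, n):
    """Publish n messages, then a sentinel telling the consumer to stop."""
    for i in range(n):
        q.put(f"order-{i}")
    q.put(None)


def consumer(q, processed):
    """Take messages off the queue until the sentinel arrives."""
    while True:
        message = q.get()
        if message is None:
            break
        processed.append(message.upper())


def run_pipeline(n):
    """Run producer and consumer side by side, linked only by the queue."""
    q = queue.Queue()
    processed = []
    worker = threading.Thread(target=consumer, args=(q, processed))
    worker.start()
    producer(q, n)
    worker.join()
    return processed


results = run_pipeline(3)
```

Because neither side calls the other directly, either one could be slowed down, restarted, or scaled out without changing the other, which is the property a managed queue provides between separate services.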

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.

8 notes

Text

Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.

3 notes

Text

Module 5

Summary of AWS Storage and Database Services

AWS offers several types of storage and database services to help meet the specific requirements of your applications.

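1. Block Storage

Instance Store

Provides temporary block-level storage for Amazon EC2 instances.

It is physically attached to the host computer of the EC2 instance, so it has the same lifespan as the instance.

Data is lost when the instance is terminated.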

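Amazon Elastic Block Store (Amazon EBS)

Provides persistent block-level storage volumes for use with Amazon EC2 instances.

Data is retained even when the EC2 instance is stopped or terminated.

You can create EBS snapshots to take incremental backups of a volume. An incremental backup copies all data the first time, and afterwards saves only the data blocks that have changed.

An EBS volume stores data in a single Availability Zone and can only be attached to an EC2 instance in the same Availability Zone.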
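The incremental backup idea described for EBS snapshots can be illustrated with a small in-memory model. This is only a sketch of the concept, not how EBS is implemented: a volume is modeled as a dict of block number to contents, the `take_snapshot` and `restore` helpers are invented names, and deleted blocks are not handled.

```python
def take_snapshot(volume, previous_state):
    """Return only the blocks whose contents differ from the previous state."""
    return {
        block: data
        for block, data in volume.items()
        if previous_state.get(block) != data
    }


def restore(snapshots):
    """Rebuild the volume by replaying snapshots from oldest to newest."""
    state = {}
    for snapshot in snapshots:
        state.update(snapshot)
    return state


volume = {0: "boot", 1: "logs-v1", 2: "data"}

# First snapshot: nothing has been backed up yet, so every block is copied.
snap1 = take_snapshot(volume, {})

# One block changes, so the second snapshot stores just that block.
volume[1] = "logs-v2"
snap2 = take_snapshot(volume, restore([snap1]))

restored = restore([snap1, snap2])
```

Here `snap1` holds all three blocks while `snap2` holds a single block, yet replaying both reproduces the current volume.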

2. Object Storage

Object Storage Concepts

Each object consists of **data (the file), metadata (information about it), and a key (a unique identifier)**.
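The three parts of an object (data, metadata, key) map naturally onto a small data structure. The sketch below is a toy in-memory model for illustration only; `StoredObject` and `Bucket` are hypothetical names, not part of any AWS SDK.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    key: str                                       # unique identifier
    data: bytes                                    # the file contents
    metadata: dict = field(default_factory=dict)   # information about the data


class Bucket:
    """A flat container that looks objects up by key."""

    def __init__(self):
        self._objects = {}

    def put(self, obj):
        # Writing to an existing key replaces the whole object.
        self._objects[obj.key] = obj

    def get(self, key):
        return self._objects[key]


bucket = Bucket()
bucket.put(StoredObject(
    key="reports/2024/summary.txt",
    data=b"quarterly summary",
    metadata={"content-type": "text/plain"},
))
fetched = bucket.get("reports/2024/summary.txt")
```

Note that the key only looks like a path. The bucket is a flat mapping from key to object, and an update replaces the object as a whole rather than editing blocks in place.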

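Amazon Simple Storage Service (Amazon S3)

A service that provides object-level storage.

Data is stored as objects in **buckets**.

Storage space is unlimited, and the maximum object size is 5 TB.

You can set permissions when uploading a file to control its visibility and access.

Versioning lets you track changes to objects.

Several storage classes are available; choose one based on how often the data is retrieved and how available it needs to be.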

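S3 Standard: For frequently accessed data. Stored in at least three Availability Zones, providing high availability.

S3 Standard-Infrequent Access (S3 Standard-IA): For data that is accessed infrequently but still needs high availability. Similar to S3 Standard, but with a lower storage price and a higher retrieval price.

S3 One Zone-Infrequent Access (S3 One Zone-IA): Stores data in a single Availability Zone. Storage costs less than S3 Standard-IA, but data can be lost if the Availability Zone fails, so it suits data that is easy to reproduce.

S3 Intelligent-Tiering: For data with unknown or changing access patterns. It monitors access patterns, automatically moves objects that have not been accessed recently to an infrequent access tier, and moves them back to the frequent access tier when they are accessed again.

S3 Glacier Instant Retrieval: For archived data that needs immediate access. Objects can be retrieved within a few milliseconds.

S3 Glacier Flexible Retrieval: Low-cost storage for data archiving. Objects are retrieved within a few minutes to a few hours.

S3 Glacier Deep Archive: The lowest-cost object storage class, suited to long-term retention. Objects are retrieved within 12 hours. Data is replicated across three or more geographically dispersed Availability Zones.

S3 Outposts: Provides object storage in an on-premises AWS Outposts environment. Suited to workloads with data proximity and local data residency requirements.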
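The Intelligent-Tiering behavior in the list above boils down to a rule based on how recently an object was read. The sketch below shows that rule only; the `choose_tier` function, the tier labels, and the 30-day default threshold are assumptions made for illustration rather than values taken from these notes.

```python
def choose_tier(days_since_last_access, threshold_days=30):
    """Pick a tier from how long ago the object was last read."""
    if days_since_last_access >= threshold_days:
        return "infrequent-access"
    return "frequent-access"


# An object untouched for 45 days drifts to the cheaper tier...
cold = choose_tier(45)

# ...and a read resets the clock, which moves it back.
warm = choose_tier(0)
```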

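3. File Storage

File Storage Concepts

Multiple clients (users, applications, servers, and so on) can access data stored in shared file folders.

Block storage is used together with a local file system to organize files, and clients access the data through file paths.

Ideal for use cases where a large number of services and resources need to access the same data at the same time.

Amazon Elastic File System (Amazon EFS)

A scalable file system used with AWS Cloud services and on-premises resources.

It grows or shrinks automatically as files are added or removed.

A regional service that stores data across multiple Availability Zones to provide high availability.

On-premises servers can also access it through AWS Direct Connect.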

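4. Relational Databases

Relational Database Concepts

Data is stored in a way that relates it to other pieces of data.

Structured Query Language (SQL) is used to store and query data.

Data is stored in a way that is easy to understand, consistent, and scalable.

Amazon Relational Database Service (Amazon RDS)

A managed service for running relational databases in the AWS Cloud.

It automates administrative tasks such as hardware provisioning, database setup, patching, and backups.

Most database engines offer encryption at rest and encryption in transit.

Supported database engines: Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle Database, Microsoft SQL Server.

Amazon Aurora: An enterprise-class relational database that is compatible with MySQL and PostgreSQL. It is up to five times faster than standard MySQL databases and up to three times faster than standard PostgreSQL databases. It replicates six copies of your data across three Availability Zones and continuously backs up to Amazon S3, providing high availability.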

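5. Nonrelational (NoSQL) Databases

Nonrelational Database Concepts

Data is organized using structures other than rows and columns (for example, key-value pairs).

You can freely add or remove attributes from items in a table, and not every item has to have the same attributes.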
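A key-value table in which items carry different attributes can be pictured with plain dictionaries. This is a conceptual sketch, not the DynamoDB API; the `put_item` helper, the keys, and the attribute names are all made up for the example.

```python
def put_item(table, key, **attributes):
    """Store an item under its key with whatever attributes it has."""
    table[key] = dict(attributes)


users = {}

# Two items in the same table with different sets of attributes.
put_item(users, "user#1", name="Ana", email="ana@example.com")
put_item(users, "user#2", name="Ben", loyalty_tier="gold", last_login="2024-01-01")

# Attributes can be added to or removed from a single item at any time.
users["user#1"]["phone"] = "555-0100"
del users["user#2"]["last_login"]

attribute_names = {key: sorted(item) for key, item in users.items()}
```

No schema change was needed for either edit, which is the flexibility the notes describe. The trade-off is that the application, not the database, has to cope with attributes that may be missing.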

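Amazon DynamoDB

A key-value database service.

Delivers single-digit millisecond performance at any scale.

It is serverless, so there are no servers to provision, patch, or manage.

Automatic scaling adjusts capacity as demand changes while maintaining consistent performance.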

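6. Data Warehousing and Migration

Amazon Redshift

A data warehousing service used for big data analytics.

It collects data from many sources and helps you understand relationships and trends across that data.

AWS Database Migration Service (AWS DMS)

A service for migrating relational databases, nonrelational databases, and other types of data stores.

Migration is possible even when the source and target database types differ, and downtime of the source database can be reduced during the migration.

Main use cases: development and test database migrations, database consolidation, continuous replication.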

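7. Additional Database Services

Amazon DocumentDB: A document database service that supports MongoDB workloads.

Amazon Neptune: A graph database service. Suited to applications that work with highly connected datasets, such as recommendation engines, fraud detection, and knowledge graphs.

Amazon Quantum Ledger Database (Amazon QLDB): A ledger database service. You can review a complete history of all changes made to your application data.

Amazon Managed Blockchain: Creates and manages blockchain networks using open-source frameworks.

Amazon ElastiCache: Adds a caching layer on top of a database to improve read times for frequently used requests. Supports Redis and Memcached.

Amazon DynamoDB Accelerator (DAX): An in-memory cache for DynamoDB. Improves response times from milliseconds to microseconds.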
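The caching layer that ElastiCache and DAX place in front of a database is often used in a cache-aside pattern: check the cache first, and go to the database only on a miss. The sketch below models that pattern with an in-memory dict; the `CacheAside` class and the fake `slow_lookup` database are hypothetical stand-ins, and expiry and invalidation are left out.

```python
class CacheAside:
    """Serve repeated reads from memory and fall back to the database on a miss."""

    def __init__(self, load_from_db):
        self._load_from_db = load_from_db
        self._cache = {}
        self.db_reads = 0

    def get(self, key):
        if key in self._cache:
            return self._cache[key]        # cache hit: no database call
        self.db_reads += 1                 # cache miss: read through to the database
        value = self._load_from_db(key)
        self._cache[key] = value
        return value


def slow_lookup(key):
    # Stand-in for a real database query (assumption for the example).
    return {"product#1": "keyboard", "product#2": "monitor"}[key]


catalog = CacheAside(slow_lookup)
first = catalog.get("product#1")
second = catalog.get("product#1")
reads_after_two_gets = catalog.db_reads
```

Two reads of the same key cost only one database call here. A real deployment would also need a policy for expiring or invalidating entries when the underlying data changes.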

0 notes

Text

The Power of Amazon Web Services (AWS): A Detailed Guide for 2025

Amazon Web Services (AWS) is the leading cloud computing platform, providing a wide range of services that empower businesses to grow, innovate, and optimize operations efficiently. With an increasing demand for cloud-based solutions, AWS has become the backbone of modern enterprises, offering high-performance computing, storage, networking, and security solutions. Whether you are an IT professional, a business owner, or an aspiring cloud architect, understanding AWS can give you a competitive edge in the technology landscape.

In this blog, we will explore AWS fundamentals, key services, benefits, use cases, and future trends to help you navigate the AWS ecosystem with confidence.

What is AWS?

Amazon Web Services (AWS) is a secure cloud computing platform that provides on-demand computing resources, storage, databases, machine learning, and networking solutions. AWS eliminates the need for physical infrastructure, enabling businesses to run applications and services seamlessly in a cost-effective manner.

With over 200 fully featured services, AWS powers startups, enterprises, and government organizations worldwide. Its flexibility, scalability, and pay-as-you-go pricing model make it a preferred choice for cloud adoption.

Key AWS Services You Must Know

AWS offers a vast range of services, categorized into various domains. Below are some essential AWS services that are widely used:

1. Compute Services

Amazon EC2 (Elastic Compute Cloud): Provides resizable virtual servers for running applications.

AWS Lambda: Enables serverless computing, allowing you to run code without provisioning or managing servers.

Amazon Lightsail: A simple virtual private server (VPS) for small applications and websites.

AWS Fargate: A serverless compute engine for containerized applications.

2. Storage Services

Amazon S3 (Simple Storage Service): Object storage solution for scalable data storage.

Amazon EBS (Elastic Block Store): Persistent block storage for EC2 instances.

Amazon S3 Glacier: Low-cost archival storage for long-term data backup.

3. Database Services

Amazon RDS (Relational Database Service): Fully managed relational databases like MySQL, PostgreSQL, and SQL Server.

Amazon DynamoDB: NoSQL database for key-value and document storage.

Amazon Redshift: Data warehousing service for big data analytics.

4. Networking and Content Delivery

Amazon VPC (Virtual Private Cloud): Provides a secure and isolated network in AWS.

Amazon Route 53: Scalable domain name system (DNS) service.

Amazon CloudFront: Content delivery network (CDN) for fast and secure content delivery.

5. Security and Identity Management

AWS IAM (Identity and Access Management): Provides secure access control to AWS resources.

AWS Shield: DDoS protection for applications.

AWS WAF (Web Application Firewall): Protects applications from web threats.

6. Machine Learning & AI

Amazon SageMaker: Builds, trains, and deploys machine learning models.

Amazon Rekognition: Image and video analysis using AI.

Amazon Polly: Converts text into speech using deep learning.

Benefits of Using AWS

1. Scalability and Flexibility

AWS enables businesses to scale their infrastructure dynamically, ensuring seamless performance even during peak demand periods.

2. Cost-Effectiveness

With AWS's pay-as-you-go pricing, businesses only pay for the resources they use, reducing unnecessary expenses.

3. High Availability and Reliability

AWS operates in multiple regions and availability zones, ensuring minimal downtime and high data redundancy.

4. Enhanced Security

AWS offers advanced security features, including encryption, identity management, and compliance tools, ensuring data protection.

5. Fast Deployment

With AWS, businesses can deploy applications quickly, reducing time-to-market and accelerating innovation.

Popular Use Cases of AWS

1. Web Hosting

AWS is widely used for hosting websites and applications with services like EC2, S3, and CloudFront.

2. Big Data Analytics

Enterprises leverage AWS services like Redshift and AWS Glue for data warehousing and ETL processes.

3. DevOps and CI/CD

AWS supports DevOps practices with services like AWS CodePipeline, CodeBuild, and CodeDeploy.

4. Machine Learning and AI

Organizations use AWS AI services like SageMaker for building intelligent applications.

5. IoT Applications

AWS IoT Core enables businesses to connect and manage IoT devices securely.

Future Trends in AWS and Cloud Computing

1. Serverless Computing Expansion

More businesses are adopting AWS Lambda and Fargate for running applications without managing servers.

2. Multi-Cloud and Hybrid Cloud Adoption

AWS Outposts and AWS Hybrid Cloud solutions are bridging the gap between on-premises and cloud environments.

3. AI and Machine Learning Growth

AWS continues to enhance AI capabilities, driving innovation in automation and data processing.

4. Edge Computing Development

AWS Wavelength and AWS Local Zones will expand the reach of cloud computing to edge devices.

Conclusion

Amazon Web Services (AWS) is transforming how businesses operate in the digital era, providing unmatched scalability, security, and performance. Whether you are an enterprise looking to migrate to the cloud, a developer building applications, or a data scientist leveraging AI, AWS has a solution for your needs.

By mastering AWS, you can explore new career opportunities and drive business innovation. Start your AWS journey today and explore the limitless possibilities of cloud computing.

0 notes

Text

One of the oldest forms of internet communication, message boards and forums are just as popular today as they have ever been. As of 2016, there were an estimated 110,000 separate forum and message board providers across the world, ranging from social media style sites like Reddit to free bulletin software built on phpBB.

Market Share Of Various Popular Forum Platforms

Studies show that premium (paid-for) forum software still takes the lead when it comes to setting up a discussion website. In fact, leading premium provider vBulletin takes an estimated 50% of the total market. This service is essentially a template site with fully customizable bulletin board platforms, available for developers to manipulate and innovate as desired.

[Credit: BuiltWith.com via Quora]

However, there has been a marked growth in the amount of open source software which enables discussion board creation, and many developers are creating their own message boards for commercial, social and personal use. We take a look at the top free platforms on the market today, tried and tested by industry experts.

Five Of The Best Free Forum Platforms

phpBB

Experts agree that you will struggle to beat phpBB for functionality and ease of use. This simple PHP bulletin board application is perfect for fan discussions, photo threads, advice pages and general messaging between users. It has millions of global users and is fully compatible with PostgreSQL, SQLite, MySQL and Microsoft SQL Server, as well as being open to editing through the General Public Licence. There are lots of opportunities to play with it and customize it, with no need for seeking permission first. Threads can be split and messages can be archived for posterity, and the site maintains a template database with modifications and styles that are open for users to play around with.

bbPress Forums

WordPress has been the leading platform for blogging for many years. There are millions of sites across the Web which use the publishing software for their business sites, blogs and even e-commerce - and now the team is adding forum sites to its range of products. bbPress Forums is a WordPress-style bulletin board creation tool, which has the smooth and seamless transitions and roomy capacity that keeps the site fast and user-friendly. Just like with the blogs, bbPress begins as a free service and has the option of a Premium or Business upgrade, with additional custom features and templates for subscribers. It is also one of the most secure free programs, with additional safety features to prevent hacking.

YetAnotherForum

Don’t let the name fool you - YetAnotherForum is anything but, with its quirky and innovative approach to forum development. The managed open source platform is designed specifically with ASP.NET in mind and is compatible with the fourth C# generation and above. It is licensed free to developers and open for reworkings of the system’s code, and it builds bright, bold message boards that are simple and easy for users and admins alike. One of the big selling points is YetAnotherForum’s reliability: the service has operated for nine years without problems and still undergoes regular testing. Over those nine years, progress has been slow but the last twelve months have seen promising developments and the program is seeing a surge in downloads and orders.

Phorum

Sometimes the old ones really are the best. Phorum has been around since the 1990s and it played a big role in the rise of forum sites. The free-to-use platform is based around PHP and MySQL development, and it is still one of the best pieces of software for forum creation that you can use free today. One of the key selling points is how flexible it is - you can host one huge message thread with thousands of users, or adopt it across hundreds of different forums for a help site, social network or discussion group. Phorum has also upped its game lately with the introduction of a new template - the XHTML 1.0 Transitional Emerald design. This is available instead of the basic template and has far more features and options, but it still offers a blank slate for the developer in terms of design and function.

Discourse

If you need a platform that scales up as your service grows and is easy to develop and change as you do, Discourse is a great choice. There are three tiers of membership available - free, paid and premium - and all options are pretty budget-friendly. Discourse is designed for creating discussion boards, and it is fully compatible with mobiles and tablets as well as PCs and laptops. It can handle busy conversations and it makes them easy to read back, with options to split threads and move conversations as needed. The open source code is readily available for programmers to play with, and the system is easy to hand over to your designer or tech team if you have something specific in mind. User-created templates and styles are available, and there is a built-in filter system which can save on moderator costs and keep your forum a safe space for visitors to chat.

Ten More Top Free Forum And Bulletin Board Programs

Haven’t found what you need among our top picks above? Not all programs suit everyone - but there are hundreds of options on the market, so keep searching for your perfect platform. Find the right match for your forum vision, and get creating thanks to these amazing open source programs. Here are some more of the best forum development programs for 2017: Vanilla Forums, Simple Machines, MiniBB Forums, MyBB, DeluxeBB, PunBB, FluxBB, UseBB, Zetaboards and Plush Forums.

The Best Of Premium Forum Software - At A Discount!

If the free tools do not meet your needs or you want the added support and security of a premium service, there are plenty of platforms to choose from - including vBulletin, Burning Board, XenForo and IP.Board. Paid-for tools often require a subscription, so take advantage of the deals available online at OZCodes.com.au. Voucher codes can give big savings on the cost of software subscriptions through leading providers.

0 notes

Text

A DBA's Checklist for Decommissioning a SQL Server

As a DBA, one of the more stressful tasks that occasionally lands on your plate is having to decommission a SQL Server. Maybe the hardware is being retired, or databases are being consolidated onto fewer servers. Whatever the reason, it’s a delicate process that requires careful planning and execution to avoid data loss or business disruption. I still remember the first time I had to…

0 notes

Text

aws cloud,

Amazon Web Services (AWS) is one of the leading cloud computing platforms, offering a wide range of services that enable businesses, developers, and organizations to build and scale applications efficiently. AWS provides cloud solutions that are flexible, scalable, and cost-effective, making it a popular choice for enterprises and startups alike.

Key Features of AWS Cloud

AWS offers an extensive range of features that cater to various computing needs. Some of the most notable features include:

Scalability and Flexibility – AWS allows businesses to scale their resources up or down based on demand, ensuring optimal performance without unnecessary costs.

Security and Compliance – With robust security measures, AWS ensures data protection through encryption, identity management, and compliance with industry standards.

Cost-Effectiveness – AWS follows a pay-as-you-go pricing model, reducing upfront capital expenses and providing cost transparency.

Global Infrastructure – AWS operates data centers worldwide, offering low-latency performance and high availability.

Wide Range of Services – AWS provides a variety of services, including computing, storage, databases, machine learning, and analytics.

Popular AWS Services

AWS offers numerous services across various categories. Some of the most widely used services include:

1. Compute Services

Amazon EC2 (Elastic Compute Cloud) – Virtual servers for running applications.

AWS Lambda – Serverless computing that runs code in response to events.

2. Storage Services

Amazon S3 (Simple Storage Service) – Object storage for data backup and archiving.

Amazon EBS (Elastic Block Store) – Persistent block storage for EC2 instances.

3. Database Services

Amazon RDS (Relational Database Service) – Managed relational databases like MySQL, PostgreSQL, and SQL Server.

Amazon DynamoDB – A fully managed NoSQL database for fast and flexible data access.

4. Networking & Content Delivery

Amazon VPC (Virtual Private Cloud) – Secure cloud networking.

Amazon CloudFront – Content delivery network for faster content distribution.

5. Machine Learning & AI

Amazon SageMaker – A fully managed service for building and deploying machine learning models.

AWS AI Services – Includes tools like Amazon Rekognition (image analysis) and Amazon Polly (text-to-speech).

Benefits of Using AWS Cloud

Organizations and developers prefer AWS for multiple reasons:

High Availability – AWS ensures minimal downtime with multiple data centers and redundant infrastructure.

Enhanced Security – AWS follows best security practices, including data encryption, DDoS protection, and identity management.

Speed and Agility – With AWS, businesses can deploy applications rapidly and scale effortlessly.

Cost Savings – The pay-as-you-go model reduces IT infrastructure costs and optimizes resource allocation.

Getting Started with AWS

If you are new to AWS, follow these steps to get started:

Create an AWS Account – Sign up on the AWS website.

Choose a Service – Identify the AWS services that suit your needs.

Learn AWS Basics – Use AWS tutorials, documentation, and training courses.

Deploy Applications – Start small with free-tier resources and gradually scale.

Conclusion

AWS Cloud is a powerful and reliable platform that empowers businesses with cutting-edge technology. Whether you need computing power, storage, networking, or machine learning, AWS provides a vast ecosystem of services to meet diverse requirements. With its scalability, security, and cost efficiency, AWS continues to be a top choice for cloud computing solutions.


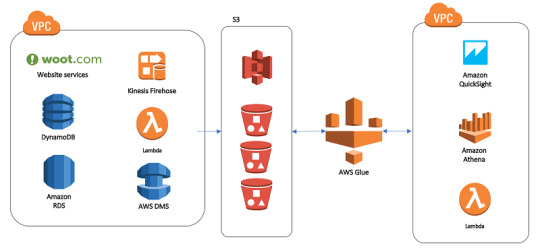

Best Practices for a Smooth Data Warehouse Migration to Amazon Redshift

In the era of big data, many organizations find themselves outgrowing traditional on-premises data warehouses. Moving to a scalable, cloud-based platform like Amazon Redshift is an attractive option for companies looking to improve performance, cut costs, and gain flexibility in their data operations. However, data warehouse migration to AWS, particularly to Amazon Redshift, can be complex and requires careful planning and precise execution to ensure a smooth transition. In this article, we’ll explore best practices for a seamless Redshift migration, covering essential steps from planning to optimization.

1. Establish Clear Objectives for Migration

Before diving into the technical process, it’s essential to define clear objectives for your data warehouse migration to AWS. Are you primarily looking to improve performance, reduce operational costs, or increase scalability? Understanding the ‘why’ behind your migration will help guide the entire process, from the tools you select to the migration approach.

For instance, if your main goal is to reduce costs, you’ll want to explore Amazon Redshift’s pay-as-you-go model or even Reserved Instances for predictable workloads. On the other hand, if performance is your focus, configuring the right nodes and optimizing queries will become a priority.

2. Assess and Prepare Your Data

Data assessment is a critical step in ensuring that your Redshift data warehouse can support your needs post-migration. Start by categorizing your data to determine what should be migrated and what can be archived or discarded. AWS provides tools like the AWS Schema Conversion Tool (SCT), which helps assess and convert your existing data schema for compatibility with Amazon Redshift.

For structured data that fits into Redshift’s SQL-based architecture, SCT can automatically convert schema from various sources, including Oracle and SQL Server, into a Redshift-compatible format. However, data with more complex structures might require custom ETL (Extract, Transform, Load) processes to maintain data integrity.

3. Choose the Right Migration Strategy

There are several strategies for migrating to Amazon Redshift, each suited to different scenarios:

Lift and Shift: This approach involves migrating your data with minimal adjustments. It’s quick but may require optimization post-migration to achieve the best performance.

Re-architecting for Redshift: This strategy involves redesigning data models to leverage Redshift’s capabilities, such as columnar storage and distribution keys. Although more complex, it ensures optimal performance and scalability.

Hybrid Migration: In some cases, you may choose to keep certain workloads on-premises while migrating only specific data to Redshift. This strategy can help reduce risk and maintain critical workloads while testing Redshift’s performance.

Each strategy has its pros and cons, and selecting the best one depends on your unique business needs and resources. For a fast-tracked, low-cost migration, lift-and-shift works well, while those seeking high-performance gains should consider re-architecting.

4. Leverage Amazon’s Native Tools

AWS provides a suite of tools that streamline and enhance the migration to Redshift:

AWS Database Migration Service (DMS): This service facilitates seamless data migration by enabling continuous data replication with minimal downtime. It’s particularly helpful for organizations that need to keep their data warehouse running during migration.

AWS Glue: Glue is a serverless data integration service that can help you prepare, transform, and load data into Redshift. It’s particularly valuable when dealing with unstructured or semi-structured data that needs to be transformed before migrating.

Using these tools allows for a smoother, more efficient migration while reducing the risk of data inconsistencies and downtime.

5. Optimize for Performance on Amazon Redshift

Once the migration is complete, it’s essential to take advantage of Redshift’s optimization features:

Use Sort and Distribution Keys: Redshift relies on distribution keys to define how rows are distributed across nodes. Selecting the right key can significantly improve query performance by reducing the data that must move between nodes during joins. Sort keys, on the other hand, determine the order in which rows are stored on disk, which speeds up query execution by reducing disk I/O.

Analyze and Tune Queries: Post-migration, analyze your queries to identify potential bottlenecks. Redshift’s query optimizer can help tune performance based on your specific workloads, reducing processing time for complex queries.

Compression and Encoding: Amazon Redshift offers automatic compression, reducing the size of your data and enhancing performance. Using columnar storage, Redshift efficiently compresses data, so be sure to implement optimal compression settings to save storage costs and boost query speed.
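To make the sort and distribution key advice concrete, here is a minimal Python sketch that builds a Redshift `CREATE TABLE` statement with an explicit `DISTKEY` and compound `SORTKEY`. The helper name `build_redshift_ddl`, the `sales` table, and its columns are assumptions made up for illustration; which columns make good keys depends on your own join and filter patterns.

```python
from typing import Sequence


def build_redshift_ddl(
    table: str,
    columns: Sequence[tuple[str, str]],
    dist_key: str,
    sort_keys: Sequence[str],
) -> str:
    """Return a CREATE TABLE statement with an explicit distribution key and sort key."""
    names = {name for name, _ in columns}
    missing = [key for key in (dist_key, *sort_keys) if key not in names]
    if missing:
        raise ValueError(f"key columns not in table definition: {missing}")

    column_sql = ",\n".join(f"    {name} {sql_type}" for name, sql_type in columns)
    return (
        f"CREATE TABLE {table} (\n{column_sql}\n)\n"
        f"DISTSTYLE KEY\n"
        f"DISTKEY ({dist_key})\n"
        f"COMPOUND SORTKEY ({', '.join(sort_keys)});"
    )


# Hypothetical table: distribute on the join column, sort on the filter column.
ddl = build_redshift_ddl(
    "sales",
    [("sale_id", "BIGINT"), ("customer_id", "BIGINT"), ("sold_at", "TIMESTAMP")],
    dist_key="customer_id",
    sort_keys=["sold_at"],
)
print(ddl)
```

The sketch only generates the statement text; running it against a cluster, and checking afterwards whether the chosen keys actually help, is left to whichever SQL client you already use.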

6. Plan for Security and Compliance

Data security and regulatory compliance are top priorities when migrating sensitive data to the cloud. Amazon Redshift includes various security features such as:

Data Encryption: Use encryption options, including encryption at rest using AWS Key Management Service (KMS) and encryption in transit with SSL, to protect your data during migration and beyond.

Access Control: Amazon Redshift supports AWS Identity and Access Management (IAM) roles, allowing you to define user permissions precisely, ensuring that only authorized personnel can access sensitive data.

Audit Logging: Redshift’s logging features provide transparency and traceability, allowing you to monitor all actions taken on your data warehouse. This helps meet compliance requirements and secures sensitive information.

7. Monitor and Adjust Post-Migration

Once the migration is complete, establish a monitoring routine to track the performance and health of your Redshift data warehouse. Amazon Redshift offers built-in monitoring features through Amazon CloudWatch, which can alert you to anomalies and allow for quick adjustments.

Additionally, be prepared to make adjustments as you observe user patterns and workloads. Regularly review your queries, data loads, and performance metrics, fine-tuning configurations as needed to maintain optimal performance.

Final Thoughts: Migrating to Amazon Redshift with Confidence

Migrating your data warehouse to Amazon Redshift can bring substantial advantages, but it requires careful planning, robust tools, and continuous optimization to unlock its full potential. By defining clear objectives, preparing your data, selecting the right migration strategy, and optimizing for performance, you can ensure a seamless transition to Redshift. Leveraging Amazon’s suite of tools and Redshift’s powerful features will empower your team to harness the full potential of a cloud-based data warehouse, boosting scalability, performance, and cost-efficiency.

Whether your goal is improved analytics or lower operating costs, following these best practices will help you make the most of your Amazon Redshift data warehouse, enabling your organization to thrive in a data-driven world.

#data warehouse migration to aws#redshift data warehouse#amazon redshift data warehouse#redshift migration#data warehouse to aws migration#data warehouse#aws migration


SQL Script for parameter changes for Data Guard setup in Oracle

Step-by-Step Primary and Standby Database Configuration

Primary Server Configuration (primary_config.sql) in Oracle Data Guard Setup:

-- Set the database to archive log mode
SHUTDOWN IMMEDIATE;
STARTUP MOUNT;
ALTER DATABASE ARCHIVELOG;
ALTER DATABASE OPEN;

-- Enable force logging
ALTER DATABASE FORCE LOGGING;

-- Set the primary database…


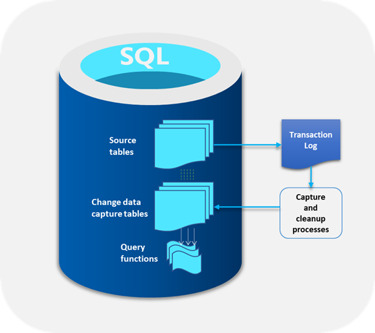

The Evolution and Functioning of SQL Server Change Data Capture (CDC)

Before Change Data Capture existed as a built-in feature, SQL Server 2005 users typically tracked changes with “after update”, “after insert”, and “after delete” triggers. Because that approach did not meet user expectations, Microsoft introduced CDC in SQL Server 2008, and it was well received. No additional activities were required to capture and archive historical data, and the feature remains in use in this form today.

Functioning of SQL Server CDC

The main function of SQL Server CDC is to capture changes in a database and present them to users in a simple relational format. These changes include inserts, updates, and deletes. The column information and metadata needed to apply the changes to a target database are available for each modified row. The changes are stored in change tables that mirror the column structure of the tracked source tables.

One of the main benefits of SQL Server CDC is that there is no need to continuously re-read the source tables where the data changes are made. Instead, SQL Server CDC provides a steady stream of change data that users can move to the appropriate target databases.
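As a rough illustration of how a consumer might replay that stream of change data, the Python sketch below applies change rows to an in-memory copy of a target table. The `__$operation` codes (1 = delete, 2 = insert, 3 = update before-image, 4 = update after-image) follow SQL Server’s change-table convention; the `apply_changes` helper, the dict-based rows, and the `id` key column are assumptions for the example, not part of SQL Server.

```python
from typing import Any

# Operation codes used in SQL Server change tables (the __$operation column).
DELETE, INSERT, UPDATE_BEFORE, UPDATE_AFTER = 1, 2, 3, 4


def apply_changes(
    target: dict[int, dict[str, Any]],
    changes: list[dict[str, Any]],
    key: str = "id",
) -> None:
    """Replay change rows, in order, against an in-memory copy of the target table."""
    for change in changes:
        op = change["__$operation"]
        # Drop CDC metadata columns so only the mirrored source columns remain.
        row = {k: v for k, v in change.items() if not k.startswith("__$")}
        if op == DELETE:
            target.pop(row[key], None)
        elif op in (INSERT, UPDATE_AFTER):
            target[row[key]] = row
        elif op == UPDATE_BEFORE:
            continue  # old image of an updated row; nothing to apply
        else:
            raise ValueError(f"unknown operation code: {op}")


target: dict[int, dict[str, Any]] = {}
changes = [
    {"__$operation": 2, "id": 1, "name": "Ada"},
    {"__$operation": 2, "id": 2, "name": "Bob"},
    {"__$operation": 3, "id": 1, "name": "Ada"},
    {"__$operation": 4, "id": 1, "name": "Ada L."},
    {"__$operation": 1, "id": 2, "name": "Bob"},
]
apply_changes(target, changes)
print(target)
```

Because only the change rows are read, the source table itself is never rescanned, which is the benefit the paragraph above describes.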

Types of SQL Server CDC

There are two types of SQL Server CDC:

Log-based SQL Server CDC, where changes in the source database are read from the transaction log and then moved to the target database.

Trigger-based SQL Server CDC, where triggers placed in the database fire automatically whenever a change occurs and record it, thereby lowering the cost of extracting the changes.
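The trigger-based variant can be sketched in a few lines. The example below deliberately uses SQLite through Python’s standard `sqlite3` module as a stand-in, not SQL Server, so that it runs anywhere; the `customers` and `customers_changes` tables and the trigger names are hypothetical. The idea carries over: each trigger fires after a change and writes a row into a change table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);

    -- Change table: mirrors the source columns plus the operation that occurred.
    CREATE TABLE customers_changes (
        change_id INTEGER PRIMARY KEY AUTOINCREMENT,
        operation TEXT NOT NULL,
        id INTEGER,
        name TEXT
    );

    CREATE TRIGGER customers_ai AFTER INSERT ON customers BEGIN
        INSERT INTO customers_changes (operation, id, name)
        VALUES ('insert', NEW.id, NEW.name);
    END;

    CREATE TRIGGER customers_au AFTER UPDATE ON customers BEGIN
        INSERT INTO customers_changes (operation, id, name)
        VALUES ('update', NEW.id, NEW.name);
    END;

    CREATE TRIGGER customers_ad AFTER DELETE ON customers BEGIN
        INSERT INTO customers_changes (operation, id, name)
        VALUES ('delete', OLD.id, OLD.name);
    END;
    """
)

conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

captured = conn.execute(
    "SELECT operation, id, name FROM customers_changes ORDER BY change_id"
).fetchall()
print(captured)
```

Note the trade-off this makes visible: every write to `customers` now performs a second write inside the same transaction, which is the overhead that log-based capture avoids.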

Summing up, SQL Server Change Data Capture has optimized the use of the CDC feature for businesses.


Effective Oracle Server Maintenance: A Guide by Spectra Technologies Inc

Organizations today rely heavily on robust database management systems to store, retrieve, and manage data efficiently. Oracle databases stand out due to their performance, reliability, and comprehensive features. However, maintaining these databases is crucial for ensuring optimal performance and minimizing downtime. At Spectra Technologies Inc., we understand the importance of effective Oracle server maintenance, and we are committed to providing organizations with the tools and strategies they need to succeed.

Importance of Regular Maintenance

Regular maintenance of Oracle servers is essential for several reasons:

1. Performance Optimization: Over time, databases can become cluttered with unnecessary data, leading to slower performance. Regular maintenance helps to optimize queries, improve response times, and ensure that resources are utilized efficiently.

2. Security: With the rise in cyber threats, maintaining the security of your Oracle database is paramount. Regular updates and patches protect against vulnerabilities and ensure compliance with industry regulations.

3. Data Integrity: Regular checks and repairs help maintain the integrity of the data stored within the database. Corrupted data can lead to significant business losses and a tarnished reputation.

4. Backup and Recovery: Regular maintenance includes routine backups, which are vital for disaster recovery. Having a reliable backup strategy in place ensures that your data can be restored quickly in case of hardware failure or data loss.

5. Cost Efficiency: Proactive maintenance can help identify potential issues before they escalate into costly problems. By investing in regular upkeep, organizations can save money in the long run.

Key Maintenance Tasks

To ensure optimal performance of your Oracle server, several key maintenance tasks should be performed regularly:

1. Monitoring and Performance Tuning

Continuous monitoring of the database performance is crucial. Tools like Oracle Enterprise Manager can help track performance metrics and identify bottlenecks. Regularly analyzing query performance and executing SQL tuning can significantly enhance response times and overall efficiency.

2. Database Backup

Implement a robust backup strategy that includes full backups and incremental backups (differential or cumulative). Oracle Recovery Manager (RMAN) is a powerful tool that automates the backup and recovery process. Test your backup strategy regularly to ensure data can be restored quickly and accurately.

3. Patch Management

Stay updated with Oracle’s latest patches and updates. Regularly applying these patches helps close security vulnerabilities and improves system stability. Establish a patch management schedule to ensure that your database remains secure.

4. Data Purging

Regularly purging obsolete or unnecessary data can help maintain the database’s performance. Identify and remove old records that are no longer needed, and consider archiving historical data to improve access speed.
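A common pattern for this is to copy old rows into an archive table and delete them from the live table in small batches, one transaction per batch, so that no batch is ever half-moved. The sketch below shows the pattern with Python’s standard `sqlite3` module rather than Oracle, purely so it is runnable as-is; the `orders` and `orders_archive` tables, the `created_at` column, and the `archive_old_rows` helper are all hypothetical.

```python
import sqlite3


def archive_old_rows(conn: sqlite3.Connection, cutoff: str, batch_size: int = 500) -> int:
    """Move rows older than cutoff from orders to orders_archive; return rows moved."""
    moved = 0
    while True:
        with conn:  # one transaction per batch: copy and delete commit together
            ids = [
                row[0]
                for row in conn.execute(
                    "SELECT id FROM orders WHERE created_at < ? ORDER BY id LIMIT ?",
                    (cutoff, batch_size),
                )
            ]
            if not ids:
                return moved
            marks = ",".join("?" * len(ids))
            conn.execute(
                f"INSERT INTO orders_archive SELECT * FROM orders WHERE id IN ({marks})",
                ids,
            )
            conn.execute(f"DELETE FROM orders WHERE id IN ({marks})", ids)
        moved += len(ids)


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, total REAL);
    CREATE TABLE orders_archive (id INTEGER PRIMARY KEY, created_at TEXT, total REAL);
    """
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "2019-03-01", 10.0), (2, "2020-07-15", 20.0), (3, "2024-01-10", 30.0)],
)

moved = archive_old_rows(conn, cutoff="2023-01-01", batch_size=1)
print(moved)
```

Small batches keep each transaction short, which limits locking on the live table; the right batch size and cutoff are workload-specific and would need testing on a real system.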

5. Index Maintenance

Indexes play a crucial role in speeding up query performance. Regularly monitor and rebuild fragmented indexes to ensure that your queries run as efficiently as possible. Automated tools can help manage indexing without manual intervention.

6. User Management

Regularly review user access rights and roles to ensure that only authorized personnel have access to sensitive data. Implementing strong user management practices helps enhance security and data integrity.

7. Health Checks

Conduct regular health checks of your Oracle database. This includes checking for corrupted files, validating data integrity, and ensuring that the system is operating within its capacity. Health checks can help preemptively identify issues before they become critical.

Conclusion

Oracle server maintenance is not just a technical necessity; it is a strategic approach to ensuring that your organization can operate smoothly and efficiently in a data-driven world. At Spectra Technologies Inc., we offer comprehensive Oracle database management services tailored to meet the unique needs of your organization. By partnering with us, you can rest assured that your Oracle server will remain secure, efficient, and resilient.

Investing in regular maintenance is investing in the future success of your business. Reach out to Spectra Technologies Inc. today to learn more about how we can help you optimize your Oracle database management and ensure seamless operations.


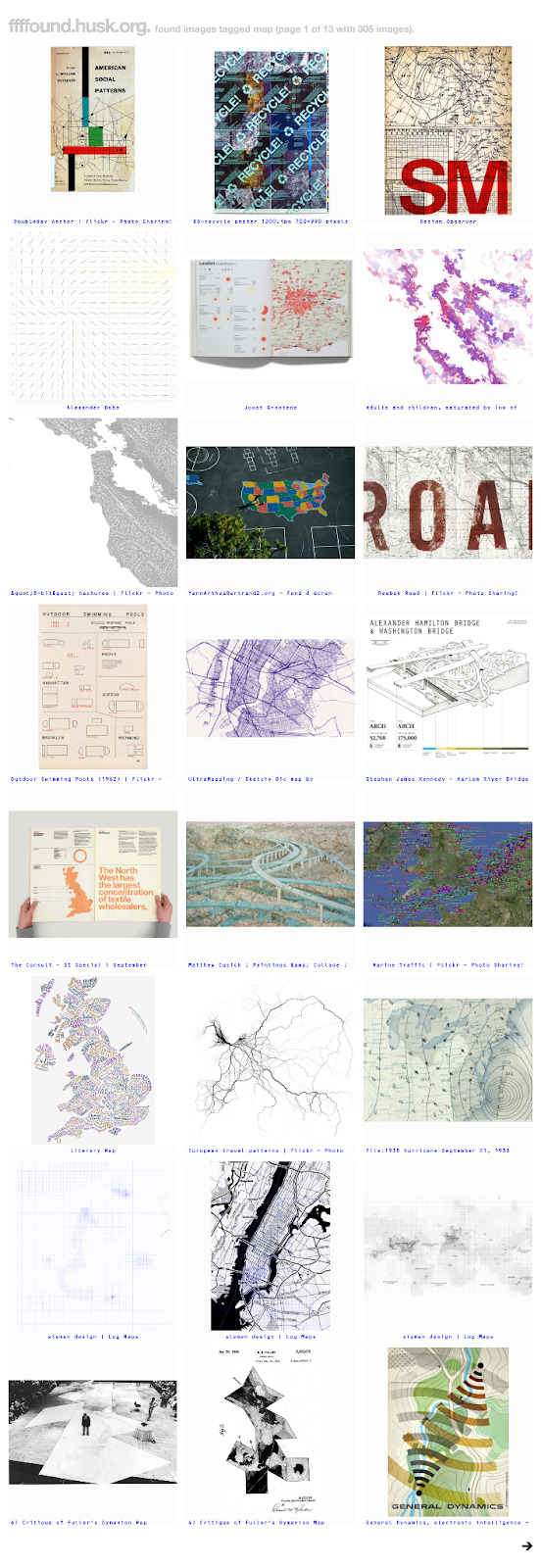

Automatic image tagging with Gemini AI

I used multimodal generative AI to tag my archive of 2,500 unsorted images. It was surprisingly effective.

I’m a digital packrat. Disk space is cheap, so why not save everything? That goes double for things out on the internet, especially those on third party servers, where you can’t be sure they’ll live forever. One of the sites that hasn’t lasted is ffffound!, a pioneering image bookmarking website, which I was lucky enough to be a member of.

Back around 2013 I wrote a quick Ruby web scraper to download my images, and ever since I’ve wondered what to do with the 2,500 or so images. ffffound was deliberately minimal - you got only the URL of the site it was saved from and a page title - so organising them seemed daunting.

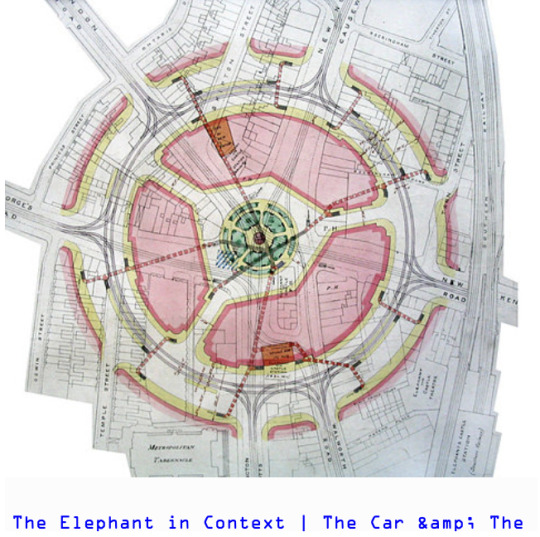

A little preview: this is what got pulled out tagged "maps".

The power of AI compels you!

As time went on, I thought about using machine learning to write tags or descriptions, but the process back then involved setting up models, training them yourself, and it all seemed like a lot of work. It's a lot less work now. AI models are cheap (or at least, for the end user, subsidised) and easy to access via APIs, even for multimodal queries.

After some promising quick explorations, I decided to use Google’s Gemini API to try tagging the images, mainly because they already had my billing details in Google Cloud and enabling the service was really easy.

Prototyping and scripting

My usual prototyping flow is opening an IPython shell and going through tutorials; of course, there’s one for Gemini, so I skipped to “Generate text from image and text inputs”, replaced their example image with one of mine, tweaked the prompt - ending up with ‘Generate ten unique, one to three word long, tags for this image. Output them as comma separated, without any additional text’ - and was up and running.

With that working, I moved instead to writing a script. Using the code from the interactive session as a core, I wrapped it in some loops, added a little SQL to persist the tags alongside the images in an existing database, and set it off by passing in a list of files on the command line. (The last step meant I could go from running it on the six files matching images/00*.jpg up to everything without tweaking the code.) Occasionally it hit rather baffling errors, which weren’t well explained in the tutorial - I’ll cover how I handled them in a follow-up post.
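For anyone wanting to try something similar, the overall shape of such a script might look like the sketch below. This is not the script from the post: the model call is replaced by a pluggable `tag_fn` (here a `fake_tagger` stub that returns a canned reply), and the `image_tags` table, `parse_tags`, and `tag_files` are names invented for the example. Swapping in a real multimodal API call is left to the reader.

```python
import sqlite3
import sys
from pathlib import Path
from typing import Callable, Iterable


def parse_tags(response_text: str, limit: int = 10) -> list[str]:
    """Split a comma-separated model reply into clean, lower-case, de-duplicated tags."""
    tags: list[str] = []
    for raw in response_text.split(","):
        tag = raw.strip().strip(".").lower()
        if tag and tag not in tags:
            tags.append(tag)
    return tags[:limit]


def tag_files(
    conn: sqlite3.Connection,
    paths: Iterable[str],
    tag_fn: Callable[[Path], str],
) -> None:
    """Tag each file with tag_fn and persist the results; skip files that fail."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS image_tags (path TEXT, tag TEXT, UNIQUE (path, tag))"
    )
    for path in map(Path, paths):
        try:
            reply = tag_fn(path)
        except Exception as exc:  # one bad image should not stop the whole run
            print(f"skipping {path}: {exc}", file=sys.stderr)
            continue
        with conn:  # commit after each image so an interrupted run keeps its progress
            conn.executemany(
                "INSERT OR IGNORE INTO image_tags (path, tag) VALUES (?, ?)",
                [(str(path), tag) for tag in parse_tags(reply)],
            )


def fake_tagger(path: Path) -> str:
    """Stand-in for the model call; returns a canned comma-separated reply."""
    return "Map, cartography, Red, map, street grid."


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    tag_files(conn, sys.argv[1:] or ["images/0001.jpg"], fake_tagger)
    print(conn.execute("SELECT path, tag FROM image_tags ORDER BY rowid").fetchall())
```

Taking the file list from the command line is what lets the shell glob decide the scope of a run, and committing per image means a run that dies partway through can simply be restarted.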

You can see the resulting script on GitHub. Running it over the entire set of images took a little while - I think the processing time was a few seconds per image, so I did a few runs of maybe an hour each to get all of them - but it was definitely much quicker than tagging by hand. Were the tags any good, though?

Exploring the results

I coded up a nice web interface so I was able to surf around tags. Using that, I could see what the results were. On the whole? Between fine and great. For example, it turns out I really like maps: 308 of the 2,580 or so images ended up with the tag ‘map’, and almost all of them, if not actual maps, do at least look cartographic in some way.

The vast majority of the most common tags I ended up with were the same way - the tag was generally applicable to all of the images in some way, even if it wasn’t totally obvious at first why. However, it definitely wasn’t perfect. One mistake I noticed was this diagram of roads tagged “rail” - and yet, I can see how a human would have done the same.

Another small criticism? There was a lack of consistency across tags. I can think of a few solutions, including resubmitting the images as a group, making the script batch images together, or adding the most common tags to the prompt so the model can re-use them. (This is probably also a good point to note it might also be interesting to compare results with other multimodal models.)
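The last of those ideas, feeding the most common tags back into the prompt, is simple to sketch. The base prompt below is the one quoted earlier in the post; the `prompt_with_common_tags` helper and the wording of the appended hint are assumptions, and whether the hint actually improves consistency would need testing.

```python
from collections import Counter

BASE_PROMPT = (
    "Generate ten unique, one to three word long, tags for this image. "
    "Output them as comma separated, without any additional text"
)


def prompt_with_common_tags(existing_tags: list[str], top_n: int = 20) -> str:
    """Extend the base prompt with the most frequently used tags so far."""
    common = [tag for tag, _ in Counter(existing_tags).most_common(top_n)]
    if not common:
        return BASE_PROMPT
    return f"{BASE_PROMPT}. Where they fit, prefer these existing tags: {', '.join(common)}"


# Hypothetical tags gathered from earlier runs.
seen = ["map", "red", "map", "typography", "map", "red"]
print(prompt_with_common_tags(seen, top_n=2))
```

On a first pass there are no existing tags, so the helper falls back to the plain prompt; on later passes the hint grows from whatever the earlier runs produced.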

Finally, there were some odd edge cases to do with colour. I can see why most of these images are tagged ‘red’, but why is the telephone box there? While there do turn out to be specks of red in the diagram at the bottom right, I’d also go with “black and white” myself over “black”, “white”, and “red” as distinct tags.

Worth doing?

On the whole, though, I think this experiment was pretty much a success. Tagging the images cost around 25¢ (US) in API usage, took a lot less time than doing so manually, and nudged me into exploring and re-sharing the archive. If you have a similar library, I’d recommend giving this sort of approach a try.


Compute Engine’s latest N4 and C4 VMs boost performance

Customers’ workloads are diverse, consisting of multiple services and components with distinct technical requirements. A uniform approach hinders their ability to scale while attempting to maintain cost and performance equilibrium.

In light of this, Google Cloud introduced the C4 and N4 machine series, two new additions to its general-purpose virtual machine portfolio, at Google Cloud Next ’24 last month. C4 and N4 are the first machine series from a major cloud provider to use the newest 5th generation Intel Xeon processors (code-named Emerald Rapids), and both are powered by Google’s Titanium, a system of purpose-built microcontrollers and tiered scale-out offloads.

C4 and N4 provide the best possible combination of cost-effectiveness and performance for a wide range of general-purpose workload requirements. C4 tackles demanding workloads with industry-leading performance, while N4’s price-performance gains and flexible configurations, such as extended memory and custom shapes, let you choose different combinations of compute and memory to optimise costs and reduce resource waste for the rest of your workloads.

C4 and N4 are built to satisfy all of your general-purpose computing needs, regardless of whether your workload demands constant performance for mission-critical operations or places a higher priority on adaptability and cost optimisation. The C4 machine series is currently available in preview for Compute Engine and Google Kubernetes Engine (GKE), while the N4 machine series is generally available.

N4: Flexible shapes and price-performance gains

The N4 machine series has an efficient architecture with streamlined features and shapes, plus next-generation Dynamic Resource Management, all designed from the ground up for price-performance gains and cost optimisation. Compared to prior generation N-Family instances, N4 helps you reduce your total cost of ownership (TCO) for a variety of workloads, with up to 18% better price-performance than N2 instances and up to 70% better price-performance than instances. Across key workloads, N4 offers up to 39% better price-performance for MySQL workloads and up to 75% better price-performance for Java applications compared to N2.

Image credit: Google Cloud

Additionally, N4 offers you the most flexibility possible to accommodate changing workload requirements, with custom shapes that provide fine-grained resource control. Custom shapes add a cost optimisation lever that is currently exclusive to Google Cloud: you only pay for what you use, which prevents overspending on memory or vCPUs. Custom shapes also provide seamless, reconfiguration-free migrations from on-premises to the cloud or from another cloud provider to Google, as well as optimisation for special workload patterns with unusual resource requirements.

In order to support a wider range of applications, N4 additionally offers 4x more predefined shapes, at significantly larger sizes of up to 80 vCPUs and 640GB of DDR5 RAM, compared to similar offerings from other top cloud providers. Predefined shapes for N4 are offered in three configurations: standard (4GB/vCPU), high-mem (8GB/vCPU), and high-cpu (2GB/vCPU).
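The memory-per-vCPU ratios above are easy to turn into a quick sizing check. The Python sketch below is only arithmetic on the figures quoted in this post (4, 8, and 2 GB per vCPU, up to 80 vCPUs); the dictionary keys and the `predefined_memory_gb` helper are made up for illustration, and the function does not know which vCPU counts are actually offered as predefined shapes.

```python
# GB of RAM per vCPU for the three predefined N4 configurations quoted above.
GB_PER_VCPU = {"4GB/vCPU": 4, "high-mem": 8, "high-cpu": 2}
MAX_VCPUS = 80  # largest N4 size mentioned in the post


def predefined_memory_gb(configuration: str, vcpus: int) -> int:
    """Return the RAM implied by a configuration's GB-per-vCPU ratio."""
    if configuration not in GB_PER_VCPU:
        raise ValueError(f"unknown configuration: {configuration}")
    if not 1 <= vcpus <= MAX_VCPUS:
        raise ValueError(f"vcpus must be between 1 and {MAX_VCPUS}")
    return GB_PER_VCPU[configuration] * vcpus


for name in GB_PER_VCPU:
    print(name, predefined_memory_gb(name, 80), "GB at 80 vCPUs")
```

At 80 vCPUs the high-mem ratio gives 640 GB, which matches the maximum RAM figure quoted for N4.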

In comparison to other cloud providers, N4 delivers up to 4 times the usual networking speed (50 Gbps standard) and up to 160K IOPS with Hyperdisk Balanced. N4 is well suited to most general-purpose workloads that need a balance between performance and cost-efficiency, including medium-traffic web and application servers, dev/test environments, virtual desktops, microservices, business intelligence applications, batch processing, data analytics, storage and archiving, and CRM applications.

Dynamic Resource Management

On N4 and Titanium, Google’s next-generation Dynamic Resource Management (DRM) improves upon current optimisation techniques to deliver better price-performance, reduce expenses, and support a wider range of applications.

Dynamic resource management is a Google-only technology that underpins Google Cloud’s worldwide infrastructure and handles workloads for YouTube, Ads, and Search. The most recent iteration uses Titanium to precisely forecast and consistently deliver the performance your workloads need. Dynamic resource management on N4 offers proven reliability, efficiency, and performance at scale.

C4: Industry-leading performance and advanced features

C4 is designed to handle your most demanding workloads. It comes with the newest generation of compute and memory, Titanium network and storage offloads, and enhanced performance and maintenance capabilities. When compared to similar services from other top cloud providers, C4 offers up to 20% better price-performance; when compared to the previous generation C3 VM, C4 offers up to 25% better price-performance across important workloads.

Image credit: Google Cloud

In addition, C4’s Titanium technology provides up to 80% greater CPU responsiveness than prior generations for real-time applications like gaming and high-frequency trading, which leads to quicker trades and a more fluid gaming experience. Demanding databases and caches, network appliances, high-traffic web and application servers, online gaming, analytics, media streaming, and real-time CPU-based inference with Intel AMX are all excellent fits for the high performance of C4.

Additionally, C4 offloads virtual storage processing to the Titanium adapter, which enhances infrastructure stability, security, performance, and lifecycle management. Titanium enables C4 to provide scalable, high-performance I/O with up to 500k IOPS, 10 GB/s throughput on Hyperdisk, and networking bandwidth of up to 200 Gbps.

C4 also provides better maintenance controls, allowing for less frequent and less disruptive planned maintenance with increased predictability and control. C4 instances can support up to 1.5TB of DDR5 RAM and 192 vCPUs. Standard, high-memory, and high-CPU variants are offered. Customers of Compute Engine and Google Kubernetes Engine (GKE) can now access C4 in Preview.

N4 and C4 work well together

Google recognises that choosing the ideal virtual machine is critical to your success. You can reduce your overall operating costs without sacrificing performance or workload-specific needs when you use C4 and N4 to handle all of your general-purpose tasks.

Use virtual machines (VMs) that grow with your business. Combining C4 and N4 gives you flexible options to mix and match machine instances according to your various workloads, along with affordable solutions that put performance and reliability first.

Availability

N4 is currently generally available in us-east1 (South Carolina), us-east4 (Virginia), us-central1 (Iowa), europe-west1 (Belgium), europe-west4 (Netherlands), and asia-southeast1 (Singapore). Customers of Google Kubernetes Engine (GKE) and Compute Engine can now access C4 in Preview.

Read more on Govindhtech.com
